NSX Managers

Revision as of 08:48, 15 January 2024

The NSX Manager Nodes are responsible for the control and management plane of the NSX architecture. Without at least one NSX Manager it is not possible to install (and manage) the services that NSX offers. In this chapter we are going to focus on deploying the NSX Manager Nodes on top of VMware vSphere and VSAN using the VMware Software Defined Data Center (SDDC) ecosystem.

NSX Manager Cluster Design

NSX can be deployed and operated using a single NSX Manager Node. In a production environment, however, it is common practice to take availability and recoverability into account for every component in the chain that forms your complete architecture. This also applies to the NSX Manager.

To provide availability and recoverability at the NSX Manager level you need to deploy the NSX Manager Nodes so that they can form a quorum. This means you need to deploy a cluster consisting of three NSX Manager Nodes.

When one NSX Manager Node is no longer available, for whatever reason, another one can take over its functionality.

The NSX Manager Nodes replicate their configuration to each other using an internal replication process.

When one NSX Manager fails, this has no impact on the management, control, or data plane. When two NSX Managers fail, the control and data plane are not impacted. The management plane, however, is impacted: the one NSX Manager left in the cluster is restricted to read-only mode, so no changes can be made to the environment. When all three NSX Managers fail, the data plane is still not impacted, but the management and control plane are not available.
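The failure behaviour described above can be summarised in a small lookup. This is only an illustrative sketch of the rules stated in this section for a three-node cluster; the names `Plane` and `impacted_planes` are made up for this example and are not part of any NSX API.

```python
from __future__ import annotations

from enum import Enum


class Plane(Enum):
    MANAGEMENT = "management"
    CONTROL = "control"
    DATA = "data"


CLUSTER_SIZE = 3  # the three-node NSX Manager cluster described in this chapter


def impacted_planes(failed_nodes: int) -> set[Plane]:
    """Return the planes that are impacted when `failed_nodes` managers are down."""
    if not 0 <= failed_nodes <= CLUSTER_SIZE:
        raise ValueError(f"failed_nodes must be between 0 and {CLUSTER_SIZE}")
    if failed_nodes <= 1:
        # Zero or one failure: nothing is impacted.
        return set()
    if failed_nodes == 2:
        # The remaining node is read-only, so only management is impacted.
        return {Plane.MANAGEMENT}
    # All three nodes down: management and control are unavailable,
    # the data plane keeps forwarding.
    return {Plane.MANAGEMENT, Plane.CONTROL}


for failed in range(CLUSTER_SIZE + 1):
    names = sorted(plane.value for plane in impacted_planes(failed))
    print(failed, "failed ->", names or "no impact")
```

Note that the data plane never appears in the result, which matches the statement that NSX Manager failures do not affect it.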

Preparing and deploying the first NSX Manager Node + prerequisites

Before you deploy your first NSX Manager Node you need to have the following prerequisites in place:

Hosting (Compute and storage)

- One of the compute and storage (vSphere) infrastructures described in the previous section, already deployed.

- Enough available compute resources (CPU and RAM) to deploy three NSX Manager Nodes.

- Enough available storage to deploy three NSX Manager Nodes.

- When your storage is not highly available (as VSAN is), make sure you are using three different datastores that map to different physical storage. When you use three different physical storage destinations, make sure their performance in terms of speed and IOPS is the same.

Network

- At least three hostnames

- At least three IP addresses with a default gateway

- A fourth IP address may be required, depending on whether you want to configure a VIP IP address for the clustered NSX Manager Nodes.

- At least one management VLAN

- At least one DNS server with the forward (A) and reverse (PTR) DNS records configured for all the IP addresses you have allocated

- At least one NTP server

Passwords

- A password for the “admin” account

- A password for the “CLI” account

- A password for the “audit” account

It is best practice to document all the information that you need for the deployment in a separate “configuration workbook” and to use the parameters you determined up front for a speedy and successful deployment of the first NSX Manager Node. Throughout this book I will describe the parts of the overall config workbook that are relevant to each section or chapter. At the end of this book, I will provide a full config workbook that you can use as a template for your own installation.

For now, I will start with the configuration items that you need to deploy the first NSX Manager Node.

Table 1: Configuration Workbook | Deploying the first NSX Manager Node

| Item | Parameter |

|---|---|

| NSX Manager Node # | 1 |

| vSphere Cluster | Management |

| Host | <let vSphere decide> |

| Storage | VSAN |

| Hostname | nsx-m-a-01 |

| IP Address | 192.168.110.10 |

| Subnet mask | 255.255.255.0 |

| Port Group | Site-A-VDS-01-PG-Management-VLAN10 |

| DNS Servers | 192.168.110.10 |

| NTP Servers | ntp.corp.local |

| admin account Password | VMware1!VMware1! |

| CLI account Password | VMware1!VMware1! |

| audit account Password | VMware1!VMware1! |
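Before starting the deployment it can help to sanity-check the workbook values. The sketch below is a minimal example that uses only Python's standard `ipaddress` module. The dictionary keys and the function names `validate_workbook` and `management_network` are made up for this illustration; the values are copied from Table 1.

```python
import ipaddress

# Network-related values copied from Table 1 (the keys are hypothetical).
workbook = {
    "hostname": "nsx-m-a-01",
    "ip_address": "192.168.110.10",
    "subnet_mask": "255.255.255.0",
    "dns_servers": ["192.168.110.10"],
    "ntp_servers": ["ntp.corp.local"],
}

REQUIRED_KEYS = ("hostname", "ip_address", "subnet_mask", "dns_servers", "ntp_servers")


def validate_workbook(wb: dict) -> list:
    """Return a list of human-readable problems; an empty list means no problem found."""
    problems = []
    for key in REQUIRED_KEYS:
        if not wb.get(key):
            problems.append(f"missing value for {key}")
    try:
        # ip_interface accepts "address/netmask" notation for IPv4.
        ipaddress.ip_interface(f"{wb.get('ip_address')}/{wb.get('subnet_mask')}")
    except ValueError as exc:
        problems.append(f"invalid IP address or subnet mask: {exc}")
    for dns in wb.get("dns_servers") or []:
        try:
            ipaddress.ip_address(dns)
        except ValueError:
            problems.append(f"DNS server is not a valid IP address: {dns}")
    return problems


def management_network(wb: dict) -> str:
    """Derive the management network in CIDR notation from IP address and mask."""
    iface = ipaddress.ip_interface(f"{wb['ip_address']}/{wb['subnet_mask']}")
    return str(iface.network)


print(validate_workbook(workbook))
print(management_network(workbook))
```

The check only covers syntax. It does not verify that the DNS records exist or that the NTP server is reachable, which you would still test from the management network itself.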


Deploying two NSX Manager Nodes and forming an NSX Manager Cluster

When the first NSX Manager Node is successfully deployed, you can start deploying the second and third NSX Manager Nodes. You can deploy the remaining NSX Manager Nodes through the UI of the first deployed NSX Manager, or you can deploy a new OVA for each node (the same way you deployed your first NSX Manager Node) and join the nodes to each other afterwards.

Depending on your requirements you can choose to deploy the NSX Managers in different ways on your vSphere Clusters and network.

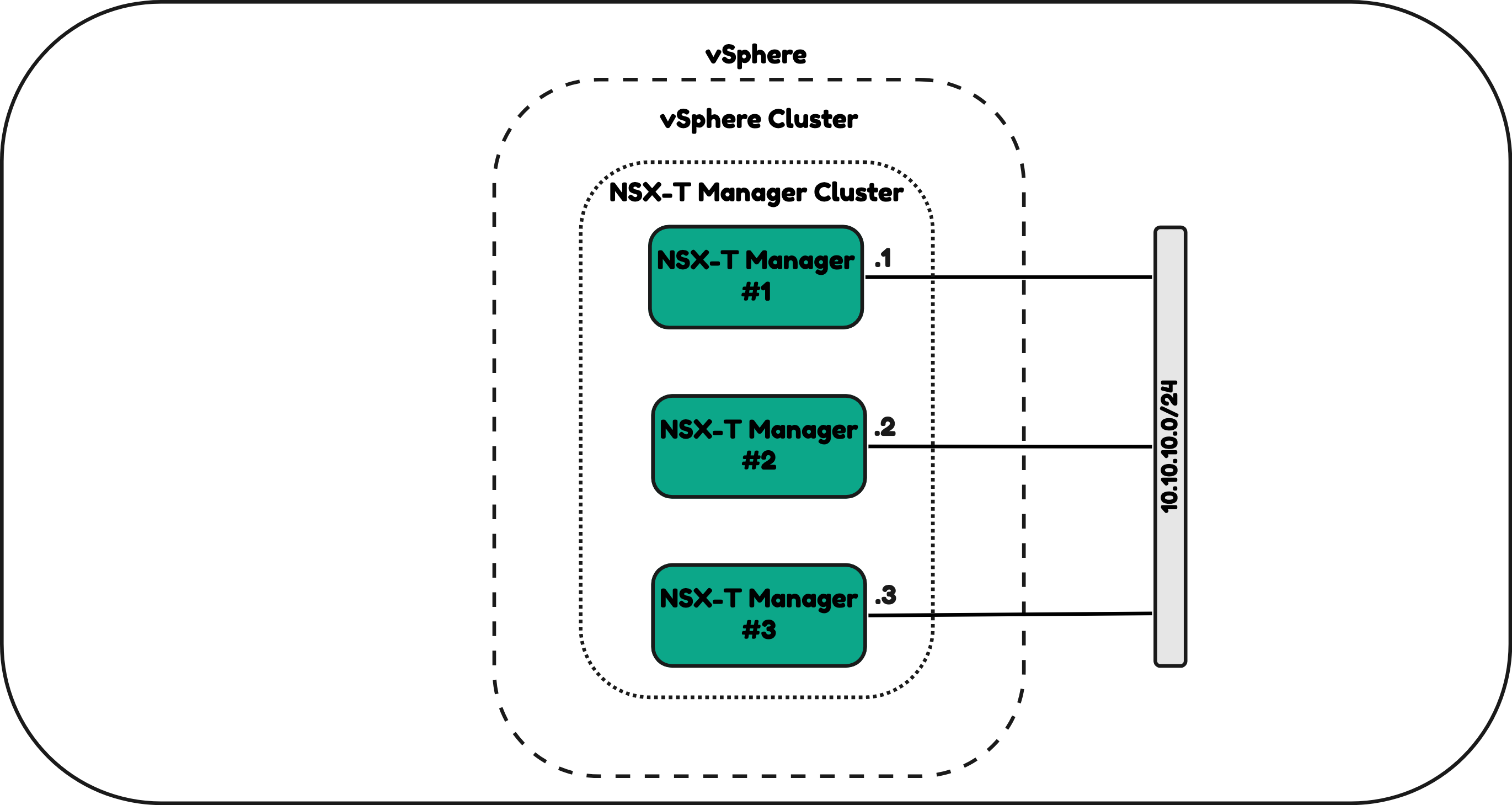

vSphere Cluster

- Use a single vSphere Cluster for all NSX Manager Nodes

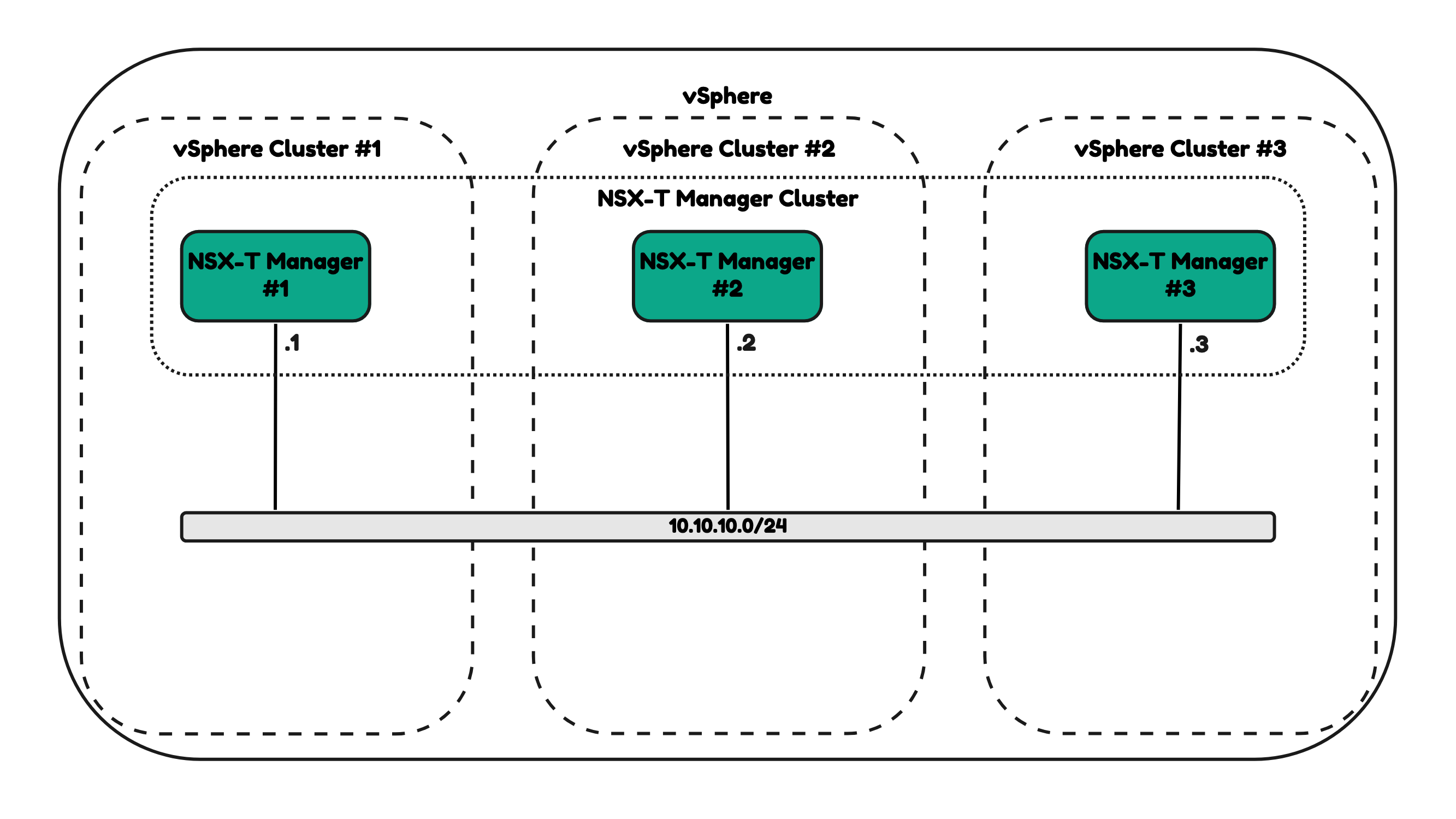

- Spread the NSX Manager Nodes across multiple vSphere Clusters

Subnets

- Use a single subnet to which all NSX Manager Nodes are connected

- Use multiple subnets, with the NSX Manager Nodes connected to different subnets

VIP or Load Balancer

In Figure 7 you will see that I have deployed all NSX Manager Nodes in the same vSphere Cluster. When this vSphere cluster goes down this will have an impact on all NSX Manager Nodes.

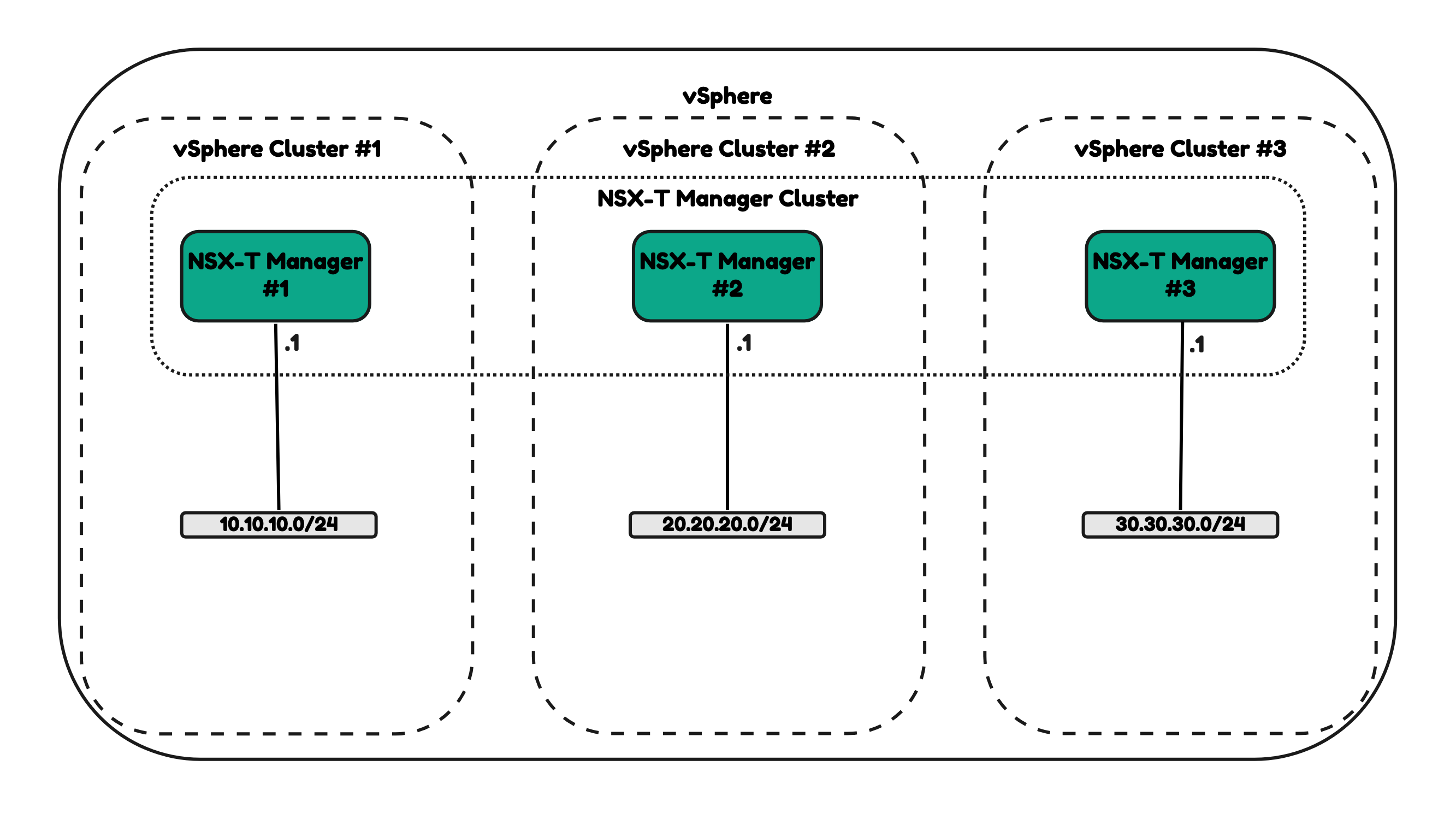

When you want to mitigate the “single vSphere Cluster” failure domain you can choose to deploy the NSX Manager nodes on three different vSphere Clusters (Figure 8).

One constraint that you need to be aware of when you deploy the NSX Manager Nodes across multiple clusters, and possibly across multiple physical locations, is that the round-trip time (RTT) between the NSX Manager Nodes cannot be more than 10 milliseconds.
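As a rough illustration of this constraint, the sketch below flags every pair of NSX Manager Nodes whose measured RTT exceeds the 10 millisecond limit. The hostnames `nsx-m-a-02` and `nsx-m-a-03` and all RTT values are hypothetical; in practice you would fill in numbers from your own measurements.

```python
MAX_RTT_MS = 10.0  # limit stated in this section


def rtt_violations(measured_rtt_ms: dict) -> list:
    """Return the node pairs whose measured RTT is above MAX_RTT_MS, sorted by name."""
    return sorted(pair for pair, rtt in measured_rtt_ms.items() if rtt > MAX_RTT_MS)


# Hypothetical measurements in milliseconds between each pair of nodes.
example_rtt_ms = {
    ("nsx-m-a-01", "nsx-m-a-02"): 0.8,
    ("nsx-m-a-01", "nsx-m-a-03"): 14.2,
    ("nsx-m-a-02", "nsx-m-a-03"): 9.9,
}

for pair in rtt_violations(example_rtt_ms):
    print("RTT too high between", pair[0], "and", pair[1])
```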

In Figure 7 and Figure 8 you have seen that I used the same subnet (10.10.10.0/24) to deploy all NSX Manager Nodes.

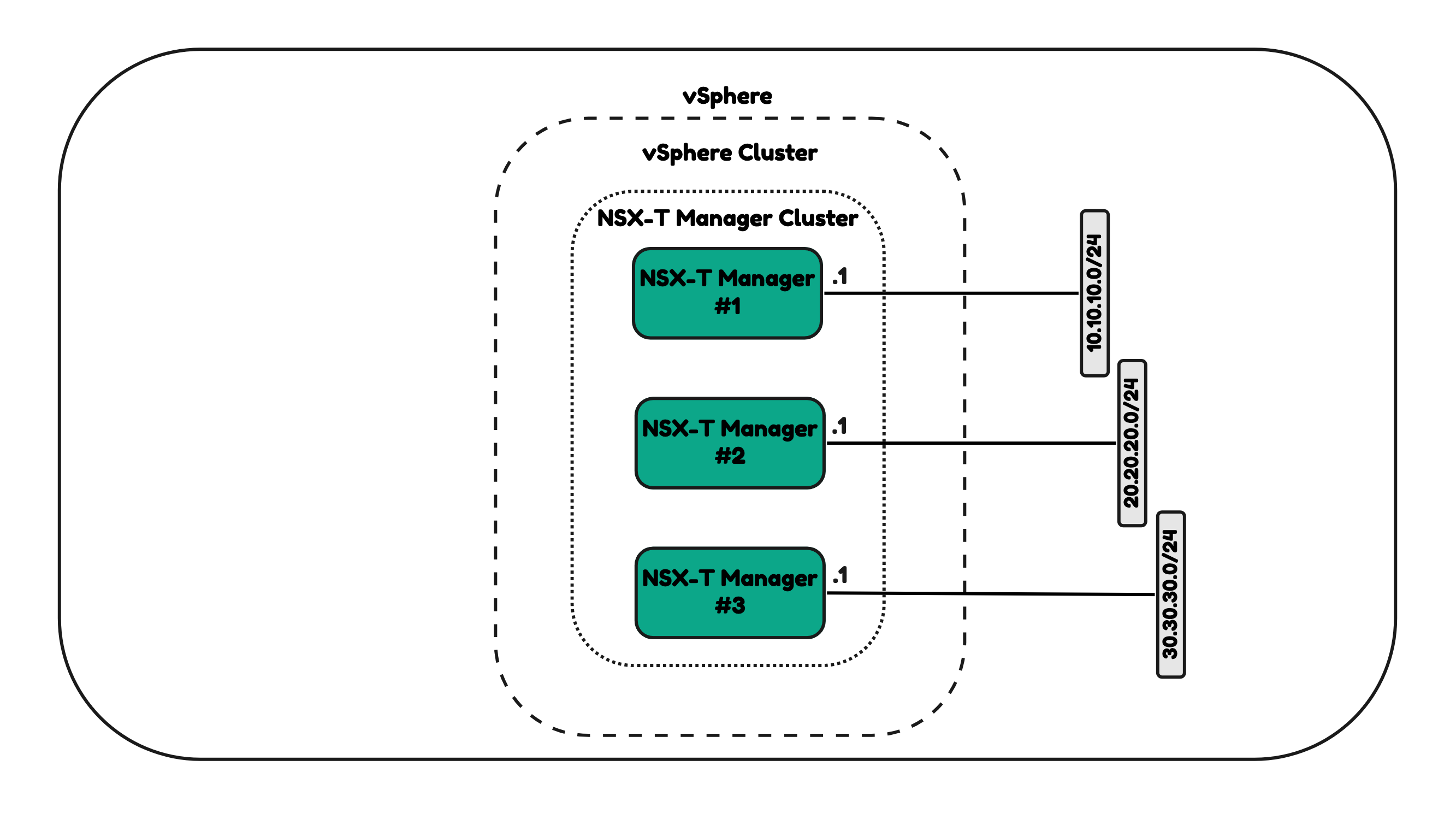

Figure 9 and Figure 10 will use different subnets.

Using a VIP IP address with the NSX Manager Cluster

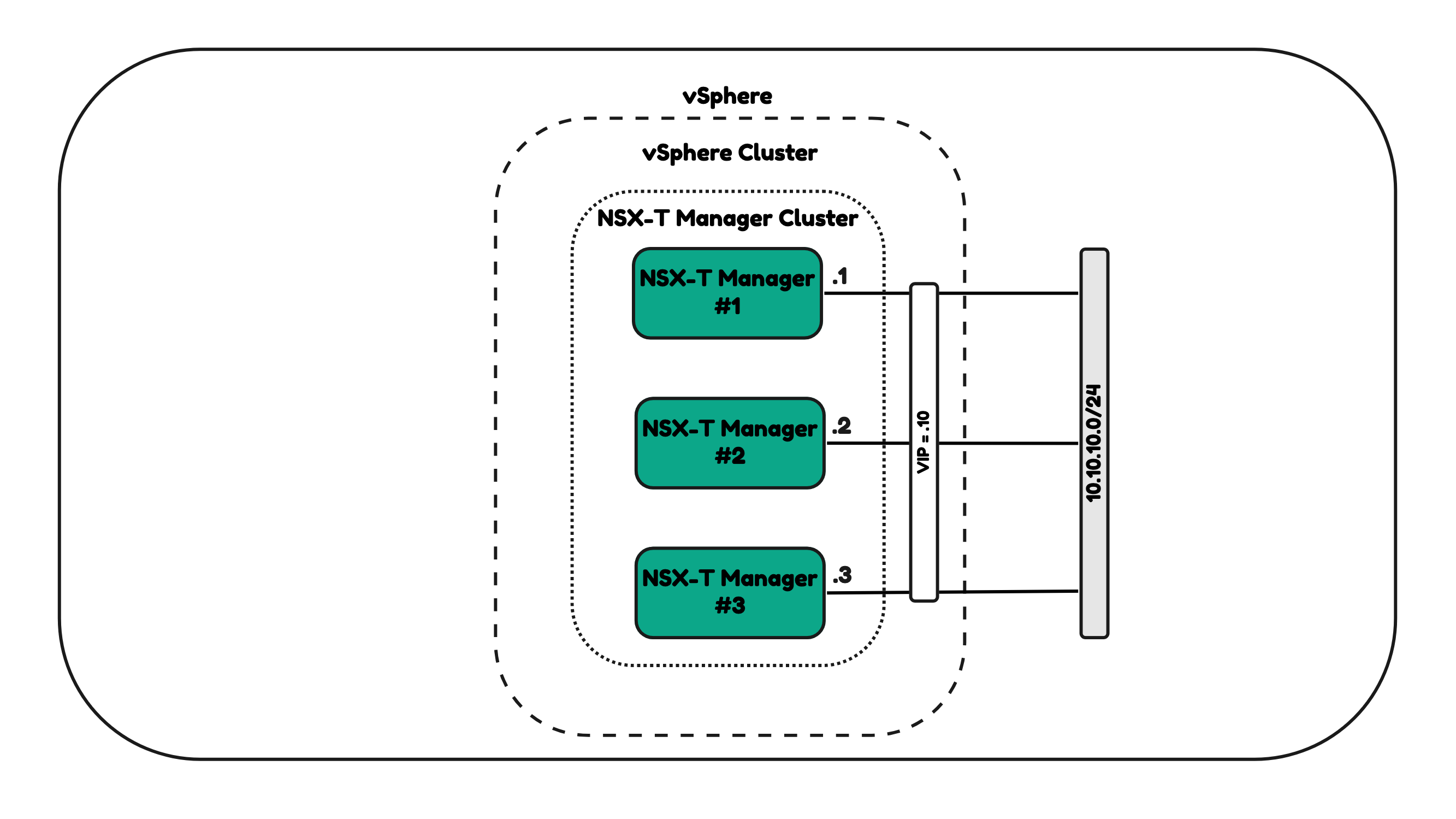

When you deploy all NSX Manager Nodes in the same subnet you can make use of the internal NSX VIP address (Figure 11).

You will be able to connect to this internal VIP address. The NSX Manager Cluster designates one of the NSX Manager Nodes as the “Master”, and this is the NSX Manager that responds to all your requests when you access the NSX UI.

When you use the internal VIP address, your management requests only go to one NSX Manager Node in the cluster and are not load balanced.

In the example illustrated in Figure 11 you see that all the NSX Manager Nodes are on the 10.10.10.0/24 network. The internal VIP address that is assigned is 10.10.10.10/24. When you use the internal VIP address, all the requests go to either 10.10.10.1, 10.10.10.2 or 10.10.10.3, depending on which node currently holds the VIP.

You will still be able to access the NSX Manager Nodes individually by using their own node IP addresses.

Table 2: Configuration Workbook | Internal NSX cluster VIP address

| Item | Parameter |

|---|---|

| VIP IP address | 10.10.10.10/24 |
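Because the internal VIP is only usable when all NSX Manager Nodes share a subnet, it is worth checking the planned addresses before you configure it. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `vip_is_usable` is made up for this example, and the addresses are the ones from Figure 11 and Table 2.

```python
import ipaddress


def vip_is_usable(vip_cidr: str, node_ips: list) -> bool:
    """Return True when every node IP lies in the same subnet as the VIP."""
    vip = ipaddress.ip_interface(vip_cidr)
    return all(ipaddress.ip_address(ip) in vip.network for ip in node_ips)


same_subnet_nodes = ["10.10.10.1", "10.10.10.2", "10.10.10.3"]
print(vip_is_usable("10.10.10.10/24", same_subnet_nodes))
```

If the check returns `False`, the nodes are spread over multiple subnets and the internal VIP is not an option.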


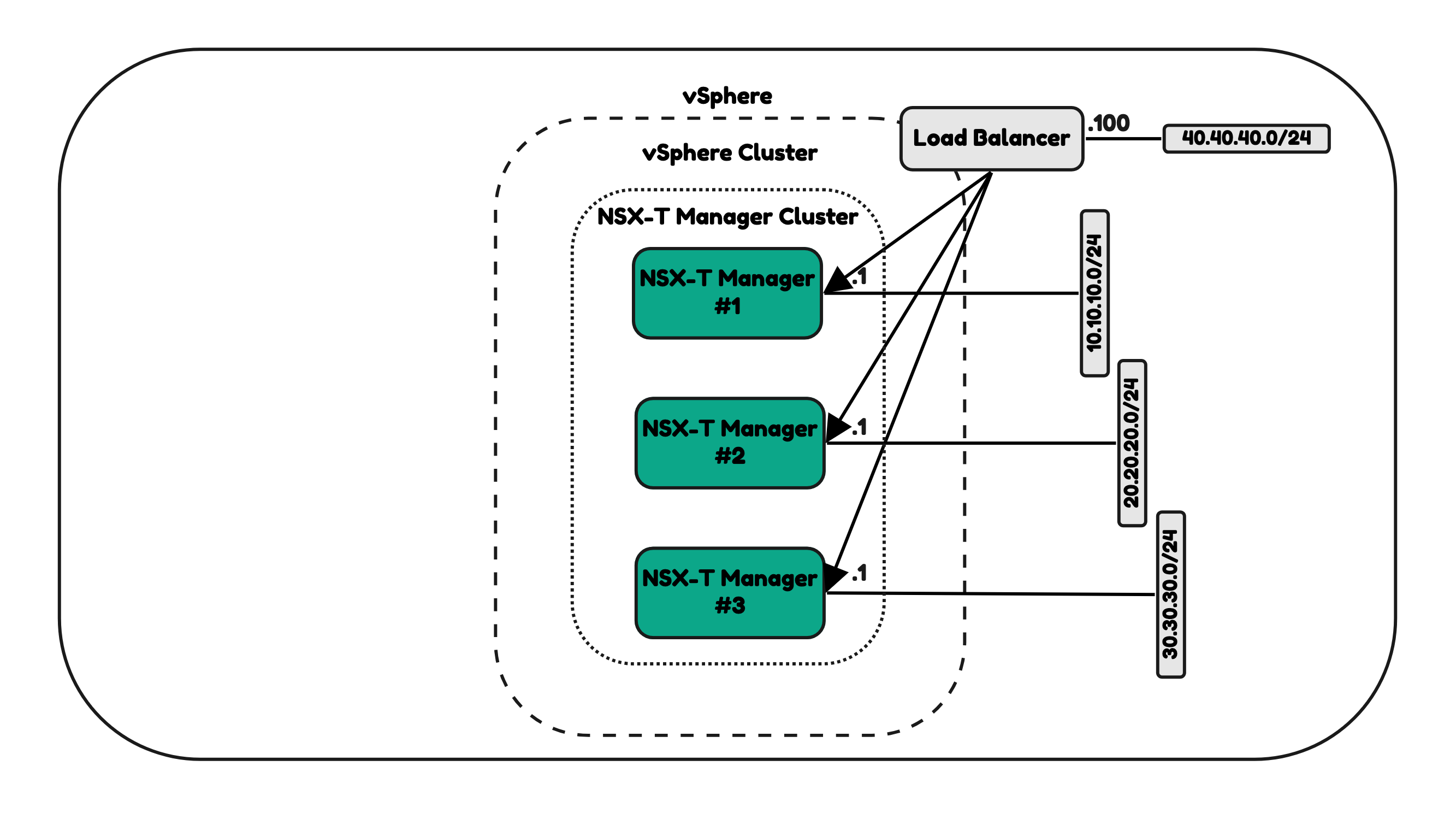

Using an external Load Balancer with the NSX Manager Cluster

When your NSX Managers are not in the same network, you can use an external load balancer to balance the load between the NSX Manager Nodes in the cluster.

You will be able to connect to the external VIP address, and one of the NSX Managers will respond to your request when you access the NSX UI. Which one responds depends on the algorithm the external load balancer uses to balance the load.

When you use an external load balancer VIP address, your management requests can go to all NSX Manager Nodes in the cluster and are load balanced.

In the example illustrated in Figure 12 you see that all the NSX Manager Nodes are on different networks. The first node is on the 10.10.10.0/24 network, the second node is on the 20.20.20.0/24 network and the third node is on the 30.30.30.0/24 network. The external load balancer's VIP address that is assigned is 40.40.40.100/24. When you use the external load balancer's VIP address, all the requests go to either 10.10.10.1, 20.20.20.1 or 30.30.30.1.

The main difference from using the internal NSX VIP address is not only that the NSX Manager Nodes are on different networks. With an external load balancer, all NSX Manager Nodes actively participate in replying when the UI is consulted, whereas with the internal NSX VIP address only ONE NSX Manager Node replies.

You will still be able to access the NSX Manager Nodes individually by using their own node IP addresses.
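The difference between the two access methods can be illustrated with a toy model. The sketch below assumes a simple round-robin algorithm for the external load balancer; this is purely an assumption for illustration, since the real distribution depends on the load balancer product and how it is configured. The function names are made up, and the node addresses are the ones from Figure 12.

```python
from itertools import cycle, islice

NODES = ["10.10.10.1", "20.20.20.1", "30.30.30.1"]


def external_load_balancer(nodes):
    """Toy model: hand requests to the nodes in turn (round-robin, an assumption)."""
    return cycle(nodes)


def internal_vip(nodes, master_index=0):
    """Toy model: every request lands on the single node that holds the VIP."""
    while True:
        yield nodes[master_index]


# Show which node would answer each of four consecutive requests.
print(list(islice(external_load_balancer(NODES), 4)))
print(list(islice(internal_vip(NODES), 4)))
```

With the load balancer model the requests are spread over all three nodes, while the VIP model keeps returning the same node until a different one takes over the VIP.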