Running NSX on top of vSAN (with vSAN Calculator in Google Spreadsheet)

I am currently working for a customer that uses NSX and vSAN together. There is a significant storage and operational dependency when NSX runs on top of vSAN. When we place NSX components on vSAN storage, we need to be aware of how data behaves on vSAN datastores and how this affects the NSX components.

NSX is typically deployed on top of three vSphere clusters: 1) the Management Cluster, 2) the Edge Cluster and 3) the Compute (or Resource) Cluster.

For all three vSphere cluster types we can configure a vSAN cluster.

NSX Components

Different NSX components (or components that NSX needs for integration) are deployed across these different vSphere / vSAN clusters:

- The Platform Services Controller, the vCenter Server and the NSX Manager are typically placed on the Management cluster
- The Controllers, Edge Services Gateways and Distributed Logical Router Control VM are typically placed on the NSX Edge cluster
- When the Distributed Logical Router Control VM is not placed on the NSX Edge cluster, it can also be placed on the Compute / Resource Cluster

Where to place the Distributed Logical Router Control VM, and the "rules" around this (in combination with the Edge Services Gateway), can be found here.

From a vSAN perspective the above NSX components are nothing more than a "VM" with data. This data can be stored redundantly depending on the storage policy selected. The storage policy takes the Fault Tolerance Method (FTM) and Failures to Tolerate (FTT) into account when storing data. The selected settings (FTM and FTT) determine how much of the raw capacity is usable. VMFS takes roughly 1% of the capacity, and depending on the FTM less capacity is usable because the copies / parity are stored across multiple Fault Domains (FD). vSAN needs 20% (30% is recommended) of free space to operate at its best performance. So in total we lose either 21% or 31% of capacity, "eaten" by the vSAN storage system, before the FTM overhead is applied.
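As a rough illustration of this arithmetic, the Python sketch below estimates usable capacity from raw capacity. The function names and the 100 TB example are hypothetical. The protection factors are assumptions: RAID-1 stores FTT+1 full copies, RAID-5 uses 3 data + 1 parity components and RAID-6 uses 4 data + 2 parity components. It is a simplification and not a replacement for the calculator or for official sizing tools.

```python
FS_OVERHEAD = 0.01  # roughly 1% file system overhead, as stated in the text


def protection_factor(ftm: str, ftt: int) -> float:
    """Raw capacity consumed per unit of VM data (assumed layouts)."""
    if ftt == 0:
        return 1.0                 # no redundancy
    if ftm == "RAID1":
        return float(ftt + 1)      # FTT+1 full copies
    if ftm == "RAID5" and ftt == 1:
        return 4 / 3               # 3 data + 1 parity
    if ftm == "RAID6" and ftt == 2:
        return 6 / 4               # 4 data + 2 parity
    raise ValueError(f"unsupported combination: {ftm} with FTT={ftt}")


def usable_capacity_tb(raw_tb: float, ftm: str, ftt: int, slack: float = 0.30) -> float:
    """Usable VM capacity after file system overhead, free-space slack and FTM overhead."""
    after_overhead = raw_tb * (1 - FS_OVERHEAD - slack)   # the 21% or 31% "eaten"
    return after_overhead / protection_factor(ftm, ftt)


if __name__ == "__main__":
    for ftm, ftt in [("RAID1", 1), ("RAID5", 1), ("RAID6", 2)]:
        usable = usable_capacity_tb(100, ftm, ftt)
        print(f"{ftm} FTT={ftt}: {usable:.2f} TB usable out of 100 TB raw")
```

Passing `slack=0.20` models the 20% minimum instead of the recommended 30%.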

Now Fault Domains are pretty important if you want to protect your data not only from disk or host failures, but also from rack, room or even complete site failures. Proper planning around this needs to be done based on your design and infrastructure, and there are plenty of guides / blogs available that will help you with this.

One that is a MUST read is the blog article by Tom Twyman.

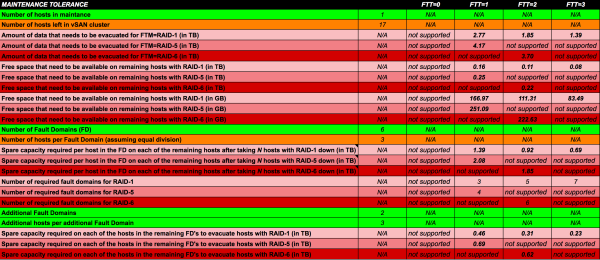

vSAN Maintenance Tolerance

Tom not only gives a good overview of how the data is stored, but also takes something very important into account: "Maintenance Tolerance". This was a new phrase for me before I read the article, but it matters whenever you need to do maintenance on a host that is part of a vSAN cluster. Before maintenance can be done "safely", the data needs to be evacuated to other hosts. Depending on how your Fault Domains are configured, this needs proper planning and forecasting. Based on the current vSAN storage capacity within a cluster, we need to calculate whether there is enough free disk space available across or within the configured Fault Domains to evacuate the data to.

When you place a host that is part of a vSAN cluster into maintenance mode you get three options:

- Do Nothing
  - The host will go down and no data is migrated
- Ensure Accessibility
  - Only the “required” data is migrated (based on the VM policies set)
  - This will take less time than Full Data Migration
- Full Data Migration
  - All the data will be migrated
  - This will take the most time

Maintenance also needs to be taken into account. When we perform maintenance (for example upgrades), the data on the host must be evacuated to other hosts. We can evacuate data within a fault domain, which means the remaining hosts in that fault domain need enough free storage capacity to hold the data of the hosts being serviced. Or we can evacuate data across fault domains, which means the target hosts need free storage capacity and, just as important, the target fault domain must NOT already host a copy of the object being evacuated. These constraints make planning difficult.
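The "within a fault domain" check can be sketched in a few lines of Python. Everything here is hypothetical (the `Host` class, the host names and the capacities), and the sketch assumes the recommended 30% free-space slack must be preserved on the receiving hosts. It does not model the cross-fault-domain case, because that requires knowing where each object copy is placed.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    fault_domain: str
    capacity_tb: float
    used_tb: float


def free_for_evacuation(host: Host, slack: float = 0.30) -> float:
    """Space a host can accept while still keeping the slack free."""
    return max(0.0, host.capacity_tb * (1 - slack) - host.used_tb)


def can_evacuate_within_fd(hosts: list[Host], name: str, slack: float = 0.30) -> bool:
    """True if the other hosts in the same fault domain can absorb this host's data."""
    target = next(h for h in hosts if h.name == name)
    peers = [h for h in hosts
             if h.fault_domain == target.fault_domain and h.name != name]
    return sum(free_for_evacuation(p, slack) for p in peers) >= target.used_tb


if __name__ == "__main__":
    cluster = [
        Host("esx1", "A", 10, 4), Host("esx2", "A", 10, 4), Host("esx3", "A", 10, 5),
        Host("esx4", "B", 10, 6), Host("esx5", "B", 10, 6),
    ]
    for name in ("esx1", "esx4"):
        print(name, "can be evacuated within its fault domain:",
              can_evacuate_within_fd(cluster, name))
```

In this made-up cluster, fault domain A has enough headroom to service one host, while fault domain B does not.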

After reading Tom's blog post I decided to create my own vSAN Calculator.

Tom's blog post and my self-created calculator below helped me understand how vSAN works and how to place this into the context of the NSX components.

vSAN Storage and Maintenance Tolerance calculator

This can be found here.

I suggest you DOWNLOAD or CLONE it and use it "locally", so that there is no interference when multiple people are using it at the same time.

If this is not possible please let me know by sending me a message on LinkedIn.

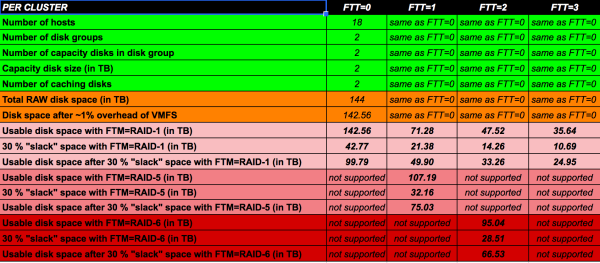

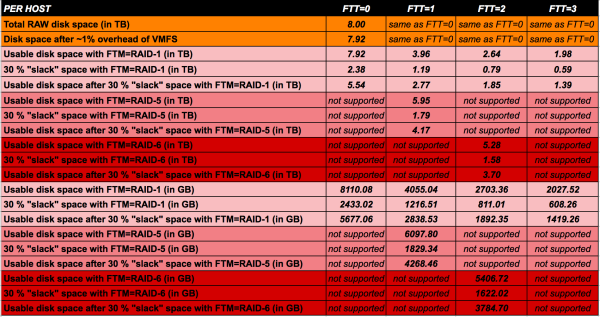

This calculator will let you calculate:

- The amount of RAW disk space per vSAN cluster
  - Per various Fault Tolerance Methods
  - Per Failures to Tolerate
- The amount of usable disk space per vSAN cluster
  - Per various Fault Tolerance Methods
  - Per Failures to Tolerate
- The amount of free disk space required (on other hosts) when hosts are placed into maintenance
  - Per various Fault Tolerance Methods
  - Per Failures to Tolerate
  - Per Fault Domain

Only change the green fields based on your requirements.

From a "compute" perspective the NSX component protection is explained in the table below:

| vSphere | |
|---|---|
| Management Cluster | NSX Manager Protected by vSphere HA or Site Recovery Manager (SRM) |
| Edge Cluster | |
| Compute Cluster | N/A |

From a "storage" perspective the NSX component protection is explained in the tables below:

| vSAN (FTT=0) | |
|---|---|
| Management Cluster | NSX Manager Protected by vSphere Replication and Site Recovery Manager (SRM) |
| Edge Cluster | |
| Compute Cluster | N/A |

The required number of hosts for FTM=RAID1 is calculated as follows:

- Where FTT=n: required hosts (or fault domains) = 2n+1
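As a small worked example of the 2n+1 rule, the Python sketch below prints the minimum host (or fault domain) count for the RAID-1 cases covered in the tables that follow. The function name is hypothetical.

```python
def raid1_min_hosts(ftt: int) -> int:
    """Minimum hosts (or fault domains) for FTM=RAID1 with FTT=n: 2n+1."""
    if ftt < 0:
        raise ValueError("FTT must be zero or greater")
    return 2 * ftt + 1


if __name__ == "__main__":
    for ftt in (1, 2, 3):
        print(f"RAID-1 FTT={ftt}: {raid1_min_hosts(ftt)} hosts or fault domains")
    # RAID-1 FTT=1: 3 hosts or fault domains
    # RAID-1 FTT=2: 5 hosts or fault domains
    # RAID-1 FTT=3: 7 hosts or fault domains
```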

| RAID-1 (FTT=1) | |
|---|---|
| Management Cluster | |
| Edge Cluster | |
| Compute Cluster | N/A |

| RAID-1 (FTT=2) | |
|---|---|
| Management Cluster | |
| Edge Cluster | |
| Compute Cluster | N/A |

| RAID-1 (FTT=3) | |
|---|---|
| Management Cluster | |
| Edge Cluster | |
| Compute Cluster | N/A |

The required number of hosts for FTM=RAID5/6 is calculated as follows:

- Where FTT=n: required hosts (or fault domains) = 2n+2
- Using the calculation above, RAID5 (FTT=1) requires 4 hosts or fault domains: the VMDK is split into 3 data chunks and 1 parity chunk is written, which adds up to 4 required hosts or fault domains.
- Using the calculation above, RAID6 (FTT=2) requires 6 hosts or fault domains: the VMDK is split into 4 data chunks and 2 parity chunks are written, which adds up to 6 required hosts or fault domains.
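The same rule can be checked against the chunk layouts described above. The Python sketch below is illustrative only: the names are hypothetical, and the capacity overhead it prints is simply (data + parity) / data derived from those layouts.

```python
# FTT -> (name, data chunks, parity chunks), as described in the text
ERASURE_LAYOUTS = {
    1: ("RAID-5", 3, 1),
    2: ("RAID-6", 4, 2),
}


def erasure_coding_min_hosts(ftt: int) -> int:
    """Minimum hosts (or fault domains) for FTM=RAID5/6 with FTT=n: 2n+2."""
    if ftt not in ERASURE_LAYOUTS:
        raise ValueError("RAID5/6 is only defined here for FTT=1 and FTT=2")
    return 2 * ftt + 2


def layout_summary(ftt: int) -> tuple[str, int, float]:
    """Return (name, required hosts, raw capacity consumed per unit of data)."""
    name, data, parity = ERASURE_LAYOUTS[ftt]
    hosts = erasure_coding_min_hosts(ftt)
    # One chunk per host or fault domain, so the layout must match the 2n+2 rule
    assert data + parity == hosts
    return name, hosts, (data + parity) / data


if __name__ == "__main__":
    for ftt in (1, 2):
        name, hosts, overhead = layout_summary(ftt)
        print(f"{name} (FTT={ftt}): {hosts} hosts, {overhead:.2f}x raw capacity per unit of data")
```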

| RAID-5 (FTT=1) | |
|---|---|
| Management Cluster | |
| Edge Cluster | |
| Compute Cluster | N/A |

| RAID-6 (FTT=2) | |
|---|---|
| Management Cluster | |
| Edge Cluster | |
| Compute Cluster | N/A |